Revolutionizing ML Testing Automation: A 10x Efficiency Breakthrough

Introduction: The Industry Context

Machine Learning (ML) applications are transforming industries, from finance and healthcare to retail and automation. This rapid adoption brings a major challenge: ensuring model accuracy, robustness, and scalability through rigorous testing. In practice, that means validating models across diverse datasets with methods that can keep pace with frequent model changes.

Key Industry Statistics:

- 40% of ML development time is spent on testing and debugging models (McKinsey & Co.).

- UI-based testing can take 5–10x longer than automated, API-driven approaches.

- ML applications require continuous testing, but traditional methods struggle to keep up with rapid iterations.

Key Challenges in Traditional ML Testing

| Challenge | Impact |
|---|---|
| Time-Consuming Process | Manually entering test data through the UI is slow and inefficient. |
| Scalability Issues | Running large-scale tests across multiple accounts and datasets is difficult to manage in practice. |
| High Costs | Manual and UI-based testing result in higher expenses. |
| Limited Scenario Coverage | Edge cases are often missed, leading to potential failures in real-world applications. |
| Inconsistent Results | Human errors and test environment limitations cause variability in test outcomes. |

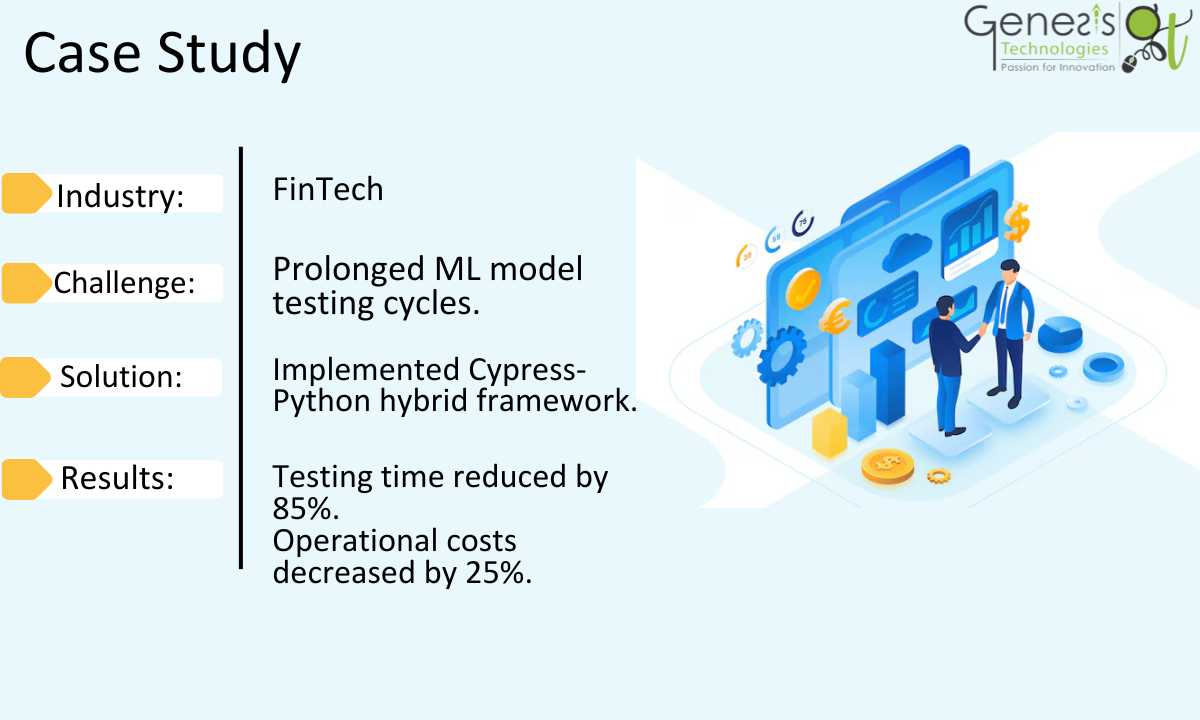

To address these issues, we developed a testing automation solution that cuts testing time tenfold, from 10 hours to 1 hour per test cycle, while improving scalability, accuracy, and cost efficiency.

The Testing Landscape in Machine Learning

Traditional Testing Methodologies and Their Limitations

- UI-Based Data Generation: Slow, repetitive, and prone to human error.

- Manual Scenario Testing: Lacks scalability, making it inefficient for iterative model improvements.

- Multi-Account Complexity: Managing multiple user sessions and testing across environments is challenging.

The Cost of Inefficient Testing

- Delays in Deployment: Prolonged test cycles slow down product releases.

- Resource Wastage: Engineers spend significant time on repetitive tasks instead of innovation.

- Compromised Model Performance: Inadequate test coverage leads to unreliable ML predictions.

Our Innovative Solution

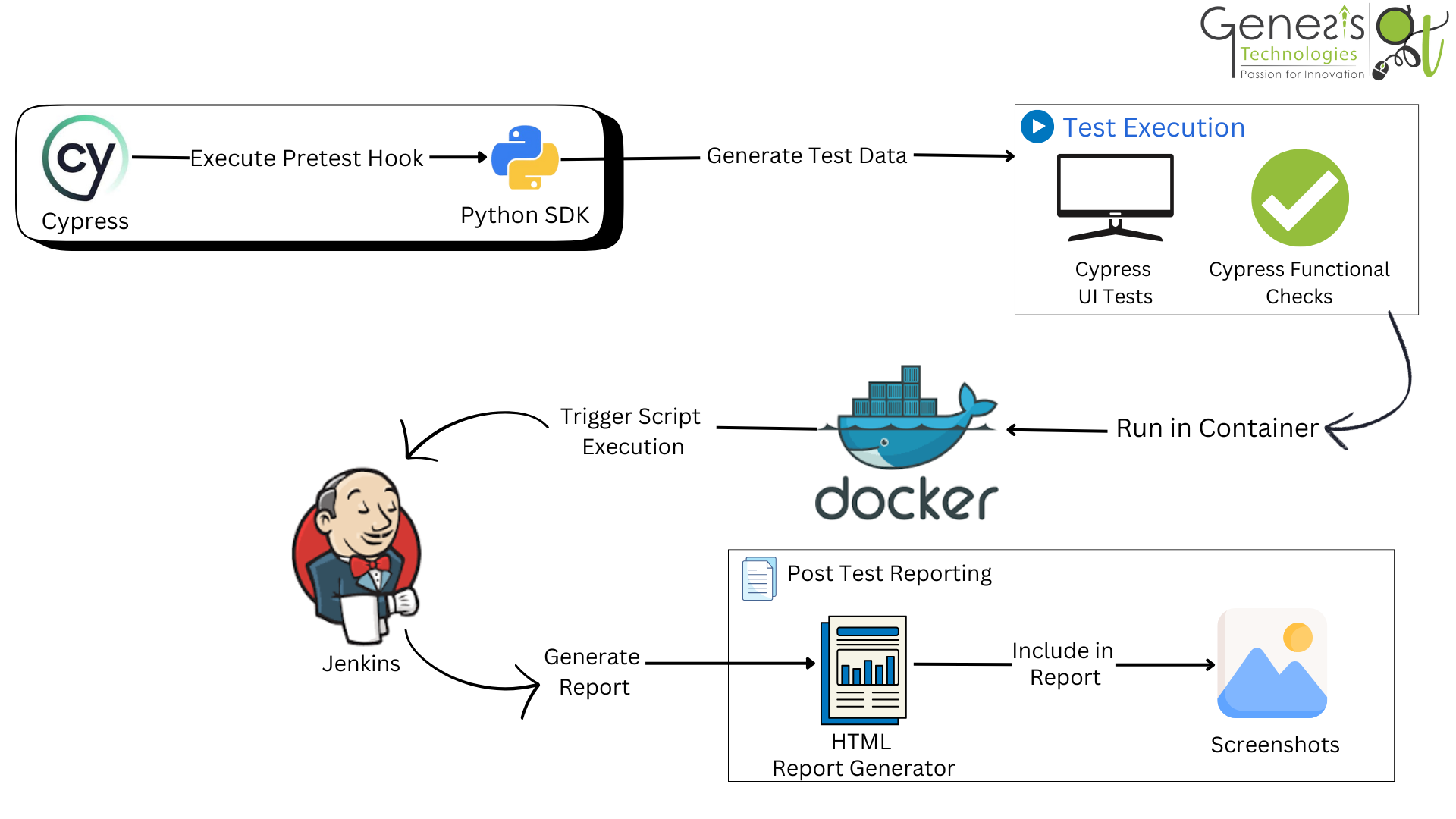

We implemented a hybrid testing framework that integrates Cypress, Python SDK scripts, and containerization, revolutionizing ML testing by drastically improving efficiency and scalability.

Key Features of Our Solution

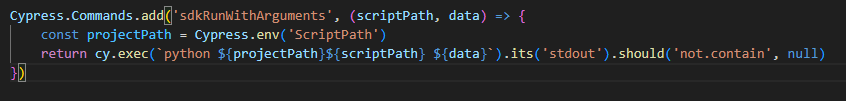

- Cypress for Automation: Acts as the central test framework, triggering Python SDK scripts for real-time data generation.

- Python SDK for Intelligent Data Generation: Bypasses UI interactions, creating accurate test data programmatically and consistently in seconds.

- Containerization for Scalability: Packages the testing pipeline in containers so it runs consistently and deploys seamlessly across environments.

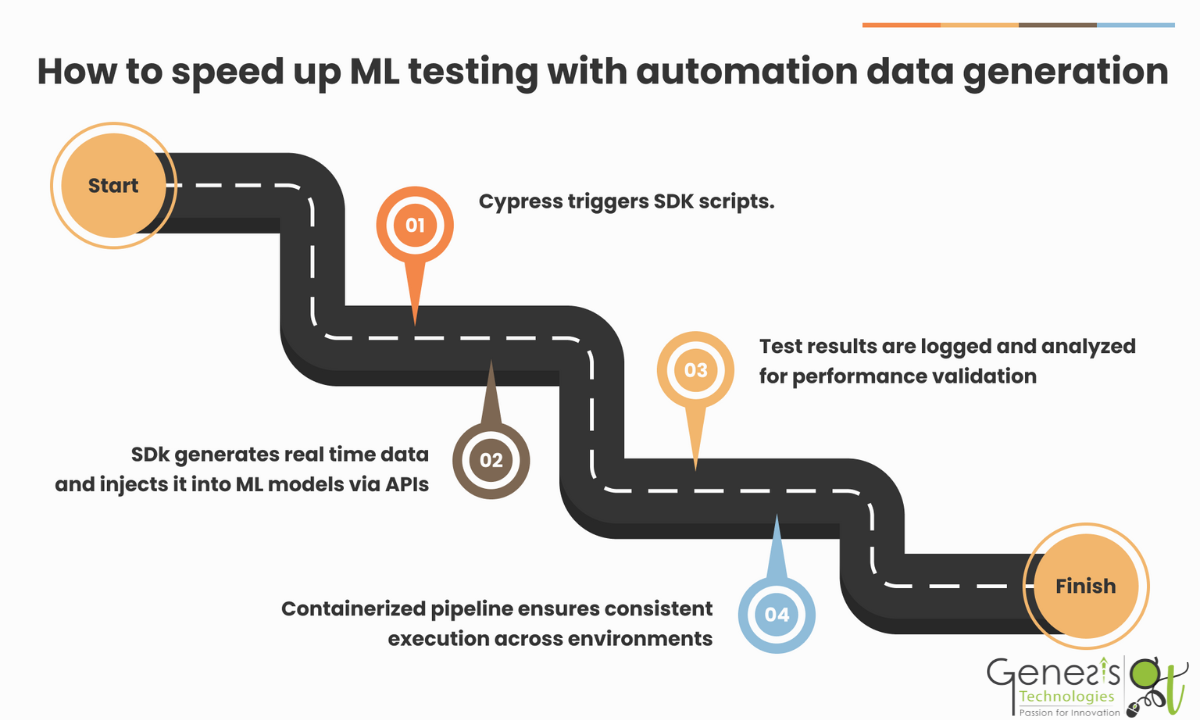

How It Works

- Step 1: Cypress triggers Python SDK scripts to generate test data dynamically.

- Step 2: The SDK injects real-time data directly into ML models via APIs.

- Step 3: The system validates model outputs, ensuring correctness and performance consistency.

- Step 4: The entire workflow runs in containerized environments, making it scalable and repeatable.
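
Steps 1 to 3 above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the framework's actual code: the `Record` fields, the `predict` stub (standing in for the API call to the model), and the validation rule are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Record:
    """One synthetic test input (hypothetical schema)."""
    amount: float
    account_id: str


def generate_records(n, seed=0):
    """Step 1: build test data programmatically instead of typing it into a UI."""
    rng = random.Random(seed)  # seeded so every run produces the same data
    return [
        Record(amount=round(rng.uniform(0, 1000), 2), account_id=f"acct-{i % 3}")
        for i in range(n)
    ]


def predict(record):
    """Step 2 stand-in: a real run would send the record to the model's API."""
    return {"score": min(record.amount / 1000, 1.0)}


def validate(prediction):
    """Step 3: check that the output is well-formed and within range."""
    score = prediction.get("score")
    return isinstance(score, float) and 0.0 <= score <= 1.0


def run_cycle(n=100, seed=0):
    """Run generate -> predict -> validate and summarize the outcome."""
    records = generate_records(n, seed)
    results = [validate(predict(r)) for r in records]
    return {"total": len(results), "passed": sum(results)}


if __name__ == "__main__":
    print(run_cycle())
```

Seeding the generator is a deliberate choice here: a failing cycle can be replayed with exactly the same inputs, which addresses the inconsistent-results problem of manual entry.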

Technical Architecture

Solution Components

- Cypress Test Framework: Orchestrates automation and integrates with external libraries.

- Python SDK: Generates and feeds structured data directly into ML applications.

- API Integration: Facilitates seamless interaction between test scripts and ML models, ensuring real-time testing and scenario validation.

- Containerization Strategy: Encapsulates the pipeline for cross-platform deployment.
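
One plausible way for the Cypress side to reach the Python side is to run a script as a system command (Cypress provides `cy.exec` for this) and parse what the script prints. The sketch below shows only the Python half of such a bridge. The `--count` and `--seed` flags and the record fields are assumptions made for illustration, not the SDK's real interface.

```python
import argparse
import json
import random
import sys


def build_payload(count, seed):
    """Create `count` synthetic records; the fields are illustrative only."""
    rng = random.Random(seed)
    return [
        {"id": i, "feature_a": rng.random(), "feature_b": rng.randint(0, 9)}
        for i in range(count)
    ]


def main(argv=None):
    parser = argparse.ArgumentParser(description="Generate test data as JSON.")
    parser.add_argument("--count", type=int, default=10)
    parser.add_argument("--seed", type=int, default=0)
    args = parser.parse_args(argv)

    # Emit a single JSON document on stdout so the caller can parse it.
    json.dump(build_payload(args.count, args.seed), sys.stdout)
    return 0


if __name__ == "__main__":
    main()
```

Saved under a hypothetical name such as `generate_data.py`, the script could be invoked as `python generate_data.py --count 5 --seed 42`, and the test runner would read the JSON from standard output.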

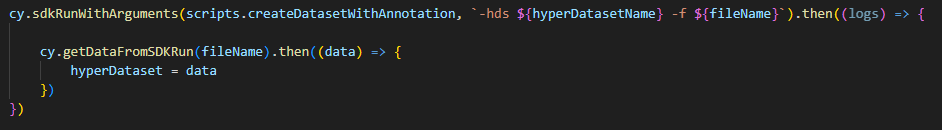

SDK Script Using Cypress Commands:

SDK Script in Test Case:

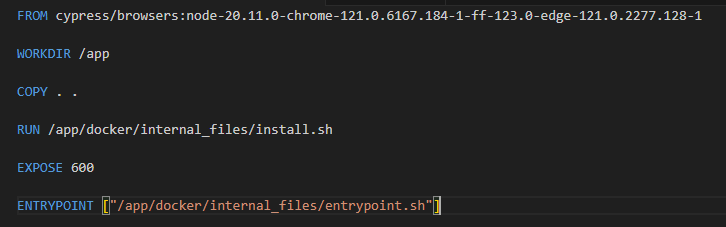

Dockerfile:

Implementation Approach

Methodology

- Hybrid Automation: Combines the UI capabilities of Cypress with the efficiency of programmatic Python SDK execution.

- Real-Time Data Integration: Enables dynamic scenario testing with real-world-like datasets.

- Scenario Adaptation: Adjusts test parameters dynamically to cover edge cases and improve test coverage.
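
Edge-case coverage of the kind described under Scenario Adaptation is often approached with boundary-value analysis. The sketch below uses that technique as a stand-in, since the source does not specify how parameters are adjusted. The field names and ranges in `FIELD_RANGES` are hypothetical.

```python
from itertools import product

# Hypothetical input fields with valid numeric ranges (inclusive).
FIELD_RANGES = {
    "age": (18, 99),
    "balance": (0, 10_000),
}


def boundary_values(low, high):
    """Return values at, just inside, and just outside each limit."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def edge_case_scenarios(field_ranges):
    """Combine the boundary values of every field into full test scenarios."""
    names = list(field_ranges)
    candidates = [boundary_values(*field_ranges[name]) for name in names]
    for combo in product(*candidates):
        scenario = dict(zip(names, combo))
        # A scenario should be accepted only if every value is in range.
        scenario["expect_valid"] = all(
            field_ranges[n][0] <= v <= field_ranges[n][1]
            for n, v in zip(names, combo)
        )
        yield scenario


if __name__ == "__main__":
    scenarios = list(edge_case_scenarios(FIELD_RANGES))
    print(len(scenarios), "scenarios generated")
```

Each scenario carries its own expected outcome, so the same list can drive both valid-input and rejection tests.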

Technical Innovations

- SDK-driven multi-strategy data creation.

- Automated API and database interactions for seamless testing workflows.

- Containerized execution for consistency across testing environments.
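
Multi-strategy data creation could be organized as a small registry of interchangeable generators. The three strategies below (`uniform`, `skewed`, `sparse`) and the `create_data` helper are invented for this sketch. The source does not list which strategies the SDK actually supports.

```python
import random


def uniform_strategy(rng, n):
    """Values spread evenly across [0, 1]."""
    return [rng.uniform(0.0, 1.0) for _ in range(n)]


def skewed_strategy(rng, n):
    """Values concentrated near zero with a long tail, clipped to [0, 1]."""
    return [min(rng.expovariate(5.0), 1.0) for _ in range(n)]


def sparse_strategy(rng, n):
    """Roughly one value in four is missing (None)."""
    return [None if rng.random() < 0.25 else rng.uniform(0.0, 1.0) for _ in range(n)]


# Registry mapping a strategy name to its generator function.
STRATEGIES = {
    "uniform": uniform_strategy,
    "skewed": skewed_strategy,
    "sparse": sparse_strategy,
}


def create_data(strategy, n, seed=0):
    """Look up a strategy by name and generate `n` reproducible values."""
    try:
        generator = STRATEGIES[strategy]
    except KeyError:
        raise ValueError(f"unknown strategy: {strategy!r}") from None
    return generator(random.Random(seed), n)


if __name__ == "__main__":
    for name in STRATEGIES:
        print(name, create_data(name, 3, seed=1))
```

Adding a new data shape then means registering one more function, without touching the tests that consume the data.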

The End Result

Quantifiable Benefits

Performance Metrics:

| Metric | Before (Traditional UI-Based) | After (Cypress + SDK) |
|---|---|---|
| Testing Time | 10 hours per test cycle | 1 hour per test cycle |
| Cost per Test Cycle | $10,000 | $7,000 |
| Scalability | Limited | Highly Scalable |
| Bug Detection Speed | Slow | Fast (real-time insights) |
| Test Coverage | Low-Medium | Comprehensive |
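
The headline ratios follow directly from the figures in the table (10 hours and $10,000 per cycle before, 1 hour and $7,000 after). The short calculation below makes the arithmetic explicit. The function names are chosen for this example only.

```python
def speedup(before_hours, after_hours):
    """How many times faster a test cycle completes."""
    return before_hours / after_hours


def cost_reduction_pct(before_cost, after_cost):
    """Percentage saved per test cycle relative to the original cost."""
    return (before_cost - after_cost) * 100 / before_cost


if __name__ == "__main__":
    print(f"Speedup: {speedup(10, 1):.0f}x")
    print(f"Cost reduction: {cost_reduction_pct(10_000, 7_000):.0f}%")
```

On these numbers the time saving is tenfold, while the cost per cycle falls by 30 percent.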

Future Outlook

Emerging Trends in ML Testing

- AI-Driven Test Automation: Using AI to predict and generate test scenarios dynamically.

- Predictive Testing: Leveraging ML to detect potential failure points before deployment.

- Cloud-Native Scalability: Deeper integration with AWS and GCP to scale testing capacity on demand.

Conclusion: Transforming ML Testing with Innovation

Our approach to ML testing automation has set a new standard for efficiency, scalability, and cost savings. By integrating Cypress, Python SDK, and containerization, we have dramatically accelerated testing cycles while ensuring model robustness.

Call to Action

🚀 Struggling with ML Testing? Let Genesis Technologies Help.

We specialize in cutting-edge testing solutions that accelerate ML testing, reduce costs, and enhance model accuracy.

🔗 Schedule a demo today! Visit Genesis Technologies to learn more.